Here's a link to all the files pertaining to our project!

KiNaomatics

This project was a lot of fun, and perhaps we'll update this blog some more in the future...

Tuesday, May 8, 2012

Friday, April 27, 2012

KiNaomatics: An Abstract

KiNaomatics

Spencer Lee and William McDermid

Group Pichu

Abstract:

Humanoid robots have many applications ranging from the fun to the incredibly useful. Humanoid robots are currently programmed to play soccer against each other autonomously, and much work is currently being done to find other, more helpful uses for them as well. Humanoids are ideal automatons for navigating a world built for humans, and there is a lot of research going into developing artificial intelligence to control them. However, there are many applications of humanoids entirely separate from the realm of artificial intelligence.

Introducing KiNaomatics: Taking small humanoids where they haven’t gone before--into YOUR shoes! Using data taken from a Microsoft Kinect, we compute the joint angles of the tracked human’s upper torso, then map these angles to the servos in an Aldebaran Nao’s upper torso. This effectively allows the human to control the Nao robot’s arms simply by moving. We also track the user’s position in free space to command a ZMP-based walk engine onboard the robot.
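To make the angle computation concrete, here is a minimal sketch of one way to turn three tracked joint positions into an elbow angle. It is written in Python for illustration only and is not our actual code. The function names, the sample coordinates, and the `ELBOW_LIMITS` range are assumptions; real servo limits should come from the robot's documentation.

```python
import math

# Hypothetical servo range in radians; placeholder values, not the Nao's real limits.
ELBOW_LIMITS = (0.0, 1.5)


def angle_between(a, b):
    """Return the angle in radians between two 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("zero-length limb vector")
    # Clamp so rounding error cannot push the cosine outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def elbow_flexion(shoulder, elbow, hand):
    """Angle between the upper arm and the forearm (0 means a straight arm)."""
    upper_arm = [e - s for s, e in zip(shoulder, elbow)]
    forearm = [h - e for e, h in zip(elbow, hand)]
    return angle_between(upper_arm, forearm)


def clamp(value, limits):
    """Keep a commanded angle inside the (low, high) servo range."""
    low, high = limits
    return max(low, min(high, value))


# Made-up joint positions: the forearm is perpendicular to the upper arm.
bent = elbow_flexion((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0))
command = clamp(bent, ELBOW_LIMITS)
```

Clamping before sending a command keeps an odd tracking frame from asking a servo to move past its range.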

Monday, April 23, 2012

Behind Schedule

Well, unfortunately, we did not reach our baseline by Wednesday night like we expected. Furthermore, having spent Thursday-Sunday at the RoboCup US Open, we haven't been able to get much done. Tonight, however, we began looking into interpreting the joint orientations that OpenNI reports as a 3x3 rotation matrix. Each column describes how one of the joint's axes is oriented: the left column corresponds to the x-axis, the middle column to the y-axis, and the right column to the z-axis. We plan on utilizing this orientation data to control the head movements of the Nao, to improve our control over the arms, and to allow us to control the robot's walking direction.
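As a rough illustration of reading such a matrix, the sketch below splits nine values into column vectors and derives yaw and pitch from one column. It is Python for illustration, not our implementation. It assumes the nine values arrive in row-major order, that the z-axis column points "forward", and that y is up; all of these should be checked against the tracker's documentation. The function names are hypothetical.

```python
import math


def columns(elements):
    """Split nine row-major matrix values into three column vectors."""
    if len(elements) != 9:
        raise ValueError("expected 9 matrix elements")
    return [[elements[row * 3 + col] for row in range(3)] for col in range(3)]


def yaw_pitch(forward):
    """Yaw (about the up axis) and pitch of a forward-pointing vector, in radians."""
    fx, fy, fz = forward
    yaw = math.atan2(fx, fz)
    pitch = math.atan2(fy, math.hypot(fx, fz))
    return yaw, pitch


# A made-up matrix: a quarter turn about the y (up) axis.
quarter_turn = [0.0, 0.0, 1.0,
                0.0, 1.0, 0.0,
                -1.0, 0.0, 0.0]
x_axis, y_axis, z_axis = columns(quarter_turn)
yaw, pitch = yaw_pitch(z_axis)
```

The same yaw value could drive either a head servo or a walking direction, depending on which joint's matrix is read (head or torso).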

Furthermore, we plan to stream the video from the Nao's cameras wirelessly. We want to enhance the "virtual reality" aspects of our project, and being able to see what the robot sees would help accomplish this.

Bad news: the BeagleBoard still isn't playing nice with the Kinect. We think there may be a problem with the ARM-compiled version, so we're going to try adapting the build of the x86-Release version instead, as people did before the ARM version was available.

Wednesday, April 18, 2012

Let's ignore the BeagleBoard for now.

Well, installing an older Ubuntu distribution ended up being a bust. The older distro would not boot, so we decided to reinstall the latest revision (r7) of 11.10 for ARM. Once again, we've set the BeagleBoard up, compiled everything, and are ready to test the Kinect. However, we've been too busy actually making progress to do this.

We define "actually making progress" like this:

Since our last post, we've begun development on a laptop running 32-bit Ubuntu 10.04 with the latest version of OpenNI (unstable), manctl SensorKinect (unstable), and the NITE middleware (unstable). This configuration worked perfectly, first try. Using the NiSimpleSkeleton sample as a launchpad, we were able to use the joint positions detected by OpenNI to calculate the joint angles we wanted. We are also able to serialize an array containing our calculated joint angles, then wirelessly transmit this information to the robot, where the data is deserialized.
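For readers curious what serializing an array of joint angles can look like, here is a minimal sketch in Python. It is not our actual wire format. The layout (a 16-bit count followed by 32-bit floats in network byte order), the use of UDP, and the function names are all assumptions made for illustration.

```python
import socket
import struct


def pack_angles(angles):
    """Serialize joint angles as a 16-bit count plus 32-bit floats (network byte order)."""
    return struct.pack("!H%df" % len(angles), len(angles), *angles)


def unpack_angles(payload):
    """Rebuild the list of joint angles from a packed payload."""
    (count,) = struct.unpack_from("!H", payload, 0)
    if len(payload) != 2 + 4 * count:
        raise ValueError("payload length does not match the joint count")
    return list(struct.unpack_from("!%df" % count, payload, 2))


def send_angles(angles, host, port):
    """Send one packed frame of joint angles as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(pack_angles(angles), (host, port))


# Made-up angles in radians; the receiving side would call unpack_angles().
frame = pack_angles([0.5, -1.25, 0.0, 1.5])
restored = unpack_angles(frame)
```

Prefixing the payload with a count lets the receiver reject truncated packets instead of driving the servos with partial data.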

Our plan is to reach our baseline goal of basic mimicry (shadowing arm/shoulder movement) and basic walking control by TONIGHT. We leave tomorrow at 5:30 am for the RoboCup US Open in Portland, Maine.

Sunday, April 15, 2012

This week, we ran into some problems with our BeagleBoard setup. Unfortunately, we found that we couldn't get OpenNI to work on the version of Ubuntu we had downloaded (Ubuntu version 11.10). We kept running into an error claiming that the "USB interface was not supported."

We tried a few alternatives at this point. First we installed Libfreenect, an open-source set of drivers for the Kinect. We found that Libfreenect worked where OpenNI would not; however, we soon found out that Libfreenect did not support the advanced skeleton tracking features we would need to get anywhere with our project.

At this point, we wiped the microSD card that hosted our BeagleBoard's operating system and installed a 10.10 distribution, but the BeagleBoard would not boot with this distribution. As I write this post, we are installing a freshly patched version of Ubuntu 11.10; fingers crossed!

In the meantime, we're going to use a laptop running Ubuntu 10.04 to develop the skeleton tracking code that will eventually run on the BeagleBoard.

Monday, April 9, 2012

Status Update: Week 1

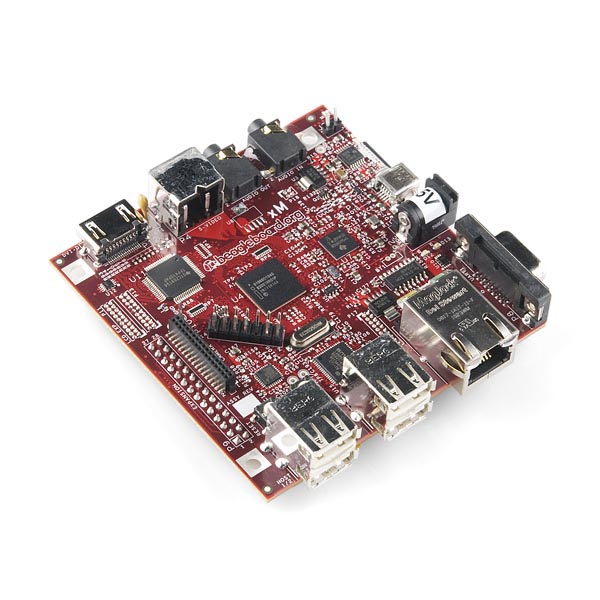

Thus far, we've set up most of the infrastructure of our project. We have our BeagleBoard-xM set up with OpenNI and the sensor drivers. Lua is also installed on the BeagleBoard, as much of our project will be programmed using Lua. Hopefully, our Microsoft Kinect will arrive today, and we will be able to begin implementing skeleton tracking.

To allow the BeagleBoard and the Aldebaran Nao to communicate, we will be using a Cisco WRVS4400N V2 router that I had lying around. The BeagleBoard will be connected to the router via an Ethernet cable, and the Nao will communicate with the router over a wireless connection. So far, we have merely established connectivity between the Nao and the BeagleBoard; actually sending parsable data will be more of a challenge.

The primary components of our project (so far):

Microsoft Kinect, Cisco WRVS4400N V2, BeagleBoard-xM

Friday, March 23, 2012

Introducing KiNaomatics!

Soccer is a growing sport in the world of robotics, with the RoboCup competition being held each year in a different part of the world. By 2050, they say, these soccer-playing robots are going to be able to beat the champions of the World Cup.

Well, as of now, they're pretty pathetic.

While we still can, we thought, why not play against these robots at their own level? KiNaomatics seeks to accomplish just that! Through the advanced image processing capabilities of the Microsoft Kinect, we aim to put a human in a robot's shoes and let them play against the native AI.

Nice try, little guy.
